Spotify's Dilemma Points to Deeper Tech Challenges

I’m going to start turning my Twitter threads into a newsletter that will let the ideas I play with there have a bit more breathing room. Considering I work in communications and am studying strategic communication for my PhD, it seems like a good fit, no? Below, I combined two threads I wrote about Spotify and Facebook into one, about why companies need to be more mindful of the harms their products cause. Let me know what you think, if you like it or hate it. And in the meantime, consider signing up.

Spotify’s Harmful Choice

When Spotify CEO Daniel Ek announced that the service would add a label pointing to health resources on podcasts that discuss COVID-19, he was pulling from an old playbook, one well-trodden by other tech companies that want us to believe they’re platforms when they’re really media production companies.

At issue is Spotify’s star podcast host, Joe Rogan, using his massive listenership to spread misinformation about the pandemic: promoting dangerous false cures (ivermectin), voicing unfounded skepticism about mRNA vaccine safety, and amplifying loony anti-vax types.

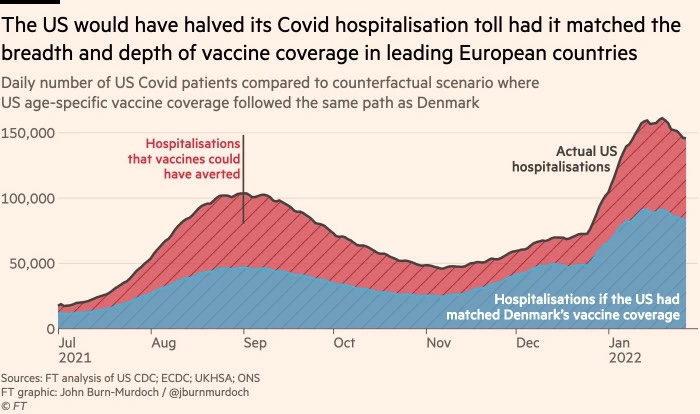

It may not be immediately clear why this would matter so much (after all, shouldn’t the principle of free speech mean he can say whatever he wants on his own show?), but in the case of a deadly pandemic, this has serious life-and-death consequences. A recent analysis by the Financial Times showed in stark detail what lower rates of vaccination have done to the U.S.

This huge gap in lives that vaccines could have saved is why, several weeks ago, hundreds of doctors and scientists begged Spotify to do something about Rogan’s show being an engine for anti-vaccine activists. Refusing vaccines is literally killing people, with hundreds of excess deaths per day.

Really, the scale of the ongoing death toll from this illness is enormous. It is so large that I don’t think people are able to process the deaths anymore; after all, what does it mean for 900,000 people to die over two years? But it is death on a massive scale all the same, far more than any sort of crime people are expressing fear about right now.

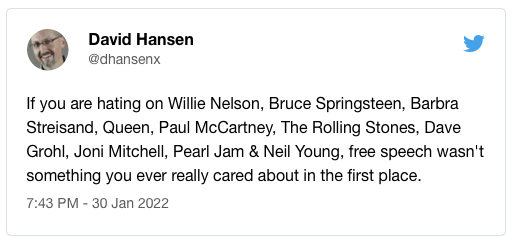

In response to the uproar over Rogan’s anti-vaccine misinformation, an even broader array of people than scientists and doctors has spoken up: the people who actually use Spotify to reach their fans. Neil Young is now only one of many artists fiercely attacking Spotify for its paid association with dangerous misinformation. And right on cue, the response to a company and a celebrity facing criticism for their speech has been to defend the speech and delegitimize the criticism.

Quite a generation gap in artists, eh?

It is a mistake to accept at face value Spotify’s claim that they “don’t censor” their creators. Every platform has, from its beginning, practiced some degree of censorship, whether of nudity, graphic violence, or antisemitism.

In Spotify’s case, they have always restricted some forms of content in their podcasts and music streams, both through a refusal of association (e.g., not signing/hosting a show) or through refusing specific content types. You can see the pre-Rogan list to get an idea of what it says: basically, don’t do hate speech and don’t infringe on copyright. It’s barebones, but it is there: Spotify already censored creators and artists on its platform. So, that’s not a viable excuse for refusing to take action against dangerous vaccine misinformation.

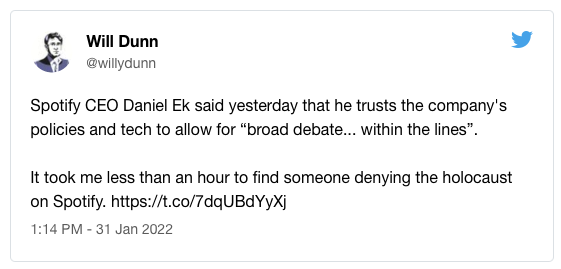

Plus, Spotify is really bad at enforcing even that bare-minimum standard. It is unreasonable to expect platforms to be airtight, but the way they design and allocate resources around banned content says a lot about what they value and what they don’t. In the case below, it is extremely difficult even to report infringing content, and Spotify provides no mechanism for following up on a complaint.

So, when Spotify CEO Daniel Ek says “it is important to me that we don’t take on the position of being content censor,” he is being misleading in a plainspoken way. Spotify has always reserved the right to censor content whenever it wants, and has long had rules about what it will restrict (even if those rules are weak and never enforced).

Put more simply: Spotify has always made decisions about what they will allow on their platform and what they won’t. They also make decisions about what they feel like enforcing and what they don’t, and about how accessible they will make enforcement mechanisms. As of early 2022, a vaccine-preventable COVID-19 pandemic has killed between 5.5 and 6 million people, more than 900,000 of them in the US alone, and Spotify spent $100 million to hire a vaccine denier (and flagrant “edgy,” “ironic” racist) to buff up its new business line. It’s a bad look, but one they are betting will keep the business growing in an investor-pleasing way.

Crucially, this is a choice they are making. Spotify bet that Rogan’s vast listenership will outweigh any social opprobrium that hosting him will incur. They are choosing to ignore the harm their platform creates because it’s good for their bottom line. It is a common stance for tech companies to take, even when they’re not from Silicon Valley (Spotify is Swedish).

What is going to be interesting now is that Facebook’s endless “we know we fell short” nonsense has created deep public cynicism about weasel statements from platforms knowingly hosting dangerous and harmful content. The playbook doesn’t work anymore. So Spotify has a choice, just as it does with its content: they can lean into being a platform for far-right, anti-vaccine, pro-“race critical,” transphobic podcast hosts, or they can be a general platform. They want to be seen as the latter, but seem to think the former is safer.

A Broader Pattern of Ignoring Harm

Recently, a woman claimed that a gang of men in a VR system owned by Facebook (now “Meta”) surrounded her avatar and groped her.

It is easy to make fun of this, because far too many people (especially older people) inexplicably think that things online aren’t “real” and therefore don’t really matter. This is wrong. People invest a lot of personal worth in their avatars, and always have. When a system like Meta’s metaverse makes avatars into literal extensions of the self, controlled through a headset you cannot see outside of, violating a user’s consent through their avatar is extremely harmful.

There is a long history of men abusing women in multiuser online spaces. In the early 1990s, before the World Wide Web (where you’re reading this newsletter right now) became widespread, people played games and socialized in shared online spaces called Multi-User Dungeons, or MUDs. Users referred to these spaces as “virtual reality,” felt a real connection to other users and to their own avatars, and imbued those avatars with personal attributes as extensions of themselves. Extensive research has shown this emotional bond to be real, with real-world consequences when something happens to the avatar.

And yet, in the early 1990s, administrators struggled to address harassment. Many online spaces were assumed to be male because of how men displaced women in the 1980s computer industry. So when a woman (or someone coded as female) used a server, male players would sometimes force female-coded avatars into sexually charged situations without their consent. Over the last 30 years, the same pattern of behavior across systems, platforms, and products has shown this to be a major challenge for multiuser environments.

Julian Dibbell wrote about this in a famous Village Voice article, “A Rape in Cyberspace,” which explored a particularly upsetting incident in 1993. Male players in LambdaMOO (one of the longest-running multiplayer online spaces, launched in 1990) used a game feature called a voodoo doll, which let players control another player’s avatar. A group of male players used the voodoo doll to immobilize and surround a female player without her consent, and simulated a violent sexual assault against her. She complained to the game’s administrators, and they didn’t know what to do.

This “rape in cyberspace” (and the victim certainly experienced it as a rape) happened in 1993, 29 years ago. Since the very start of online multiuser environments, sexual abuse has been a known problem in every product that features strangers talking in a shared online space. Literally decades of product launches show how much of a problem it is.

So, why the HELL are products still being launched without safety mechanisms to protect users? Why is Spotify blithely buying up podcast hosts without any consideration of how their lies about medicine and science have led to preventable deaths? Why isn’t this a bigger concern?

Spotify’s neglect of product harm follows a long Silicon Valley tradition of “move fast and break things” growth hacking. Meta in particular is infamous for its blithe ignorance of how its products can be misused. Now that they’re pushing ahead with “haptic” gloves, the question of how to protect users from unwanted groping and other forms of assault becomes MORE important, yet as an organization they stay silent about what the challenges are and how they plan to keep users safe.

The rapid push into new, immersive computer experiences without considering user safety, after literally decades of experience showing that safety is important, is not just irresponsible. It is immoral. Meta is building a rape machine with its metaverse, and has said nothing to assure the public that it will take safety more seriously there than it has on Facebook. Spotify is aiming to take over the podcast space (they still stand a very good chance of becoming the dominant podcast platform), but they are doing so without a plan for minimizing the harm podcast hosts can cause in society.

The half-hearted apologies proffered by Spotify and Rogan don’t mean anything. They are both pretty obviously insincere (especially Rogan’s “sorry you got angry” approach). But the bigger issue here isn’t contrition; it is that huge, billion-dollar global companies keep launching products that a few seconds of thought would reveal will cause incredible harm. And that harm just doesn’t factor strongly enough into product design and launch. Instead, it is shoved onto the communications team, who are forced to do damage control for a problem they didn’t create.

That’s bad for us. Extremely powerful corporations being able to act with impunity for the sake of a marginal gain in net revenue is not a civic value I recognize from the foundational documents of a rights-protecting, liberal democracy. No one can vote Spotify or Facebook into behaving better. There is no recall mechanism if enough people are fed up with Ek or Zuckerberg behaving so negligently toward the harm they unleash in society.

I wish I had answers to this problem, but I just don’t. I don’t know how to put the genie back in the bottle. Maybe some of you do? If so, let’s start a conversation.