Pitfalls of Using AI in Research

My preferred qualitative data analysis software, Atlas.ti, recently announced a new product: automated coding, powered by OpenAI. I’m shocked at how badly thought out it is. Let me explain.

Qualitative research rests on a couple of principles to ensure rigor and validity. It is fundamentally interpretive, meaning it is about applying analytic judgment to something that isn’t very quantifiable, with the aim of generating insights into attitudes, beliefs, motivation, and perspectives. A friend recently quipped that “all research is qualitative,” which he linked to an alarming tendency in quantitative circles to use shortcuts to avoid engaging in the deep thinking necessary to understand human behavior. He was referencing what I think is a profound insight that gets at some damaging pathologies in academia, but that’s beside the point — which is that qualitative research is, fundamentally, interpretative.

The data qualitative research uses would be familiar to a large language model. It involves interview data, transcripts, recordings, images, text, things like that. LLMs excel at cataloguing this sort of thing, since it makes up the data they are “trained” on. More on that in a minute.

There is a huge range of approaches to take when analyzing such disparate data sources. The three I am close to are below:

- Ethnography involves the long term embedding in a community as a participant-observer so the researcher can come to view the world through the eyes of the community she is studying. Think of how we typically envision anthropology working. This produces data like interviews, discussions, and field notes (which is like a diary on steroids, capturing mental state, observations, events, interpretations, and questions). Here is a fantastic book on how field notes generate understanding — they are rich texts on their own, and sometimes difficult to interpret without the full scope of experience the researcher brings to them. They are also iterative, and are updated in a transparent and documented process as the researcher gains more insight into the community.

- Grounded Theory uses inductive reasoning to generate insights based on collected data. Many people use it in conjunction with ethnography to form new insights into how communities behave and how individuals within those communities view and interpret the world. It is commonly used across social disciplines, from philosophy to anthropology to market research, since it follows from the evidence: you collect data, then interpret that data, and from that interpretation you form a theory to explain it. This is a mirror of “traditional” science (called positivist science), which starts with a hypothesis that is then tested using already existing theories, which are either validated or updated based on that testing. Grounded Theory instead generates theories from data, and does not test hypotheses. It is grounded in the data, instead of testing the data.

- Discourse analysis is the analysis of language, typically based in talk though not always. This has typically taken the form of observing (either in person or through recording) a set of people talking, and then applying rigorous principles to understand how the participants are applying signs, using tactics, and expressing meaning. Linguistic approaches to this method go deep, and are way beyond my capacity to even survey here. But communication scholars use this method to understand how people mediate their interactions, and when applied in a critical context (which is how I use the method) it generates insights into how power is expressed and adapted to through talk.

These approaches differ in how they try to understand people, phenomena, and analysis. Two common ways they can do this are phenomenology, which tries to understand the subjective experience of the people being studied, and constructivism, which engages with how the differing perspectives of researcher and community can influence the creation of theory to explain phenomena. At its heart, these different approaches are subjective — that is, they reject the idea of an objective social reality and instead seek to understand how different people approach and understand the world around them without imposing some outside assumption.

They also share one important thing in common: they generate huge amounts of text that requires intense labor to understand. This seems like an ideal use case for an LLM, right? After all, they’re designed to process large bodies of text. Not so fast.

Interpreting qualitative data is called Qualitative Data Analysis, or QDA. After data are collected, it needs to be organized. Organization is a process of interpretation: you have to apply judgment to why you place pieces of data together, why you separate categories the way you have, and so on. This should be documented through research memos, which are how researchers track their evolving understanding of the data and assists in ensuring rigor. Once the data are organized, they are then coded according to a set process — either the codes are determined ahead of time (if you are searching for something specific) or they emerge as you go, through a multistep process that involves refining and updating the coding terms to generate theory. Sections of text are highlighted and assigned an attribute. Finally, after the data are organized and coded, they are analyzed according to the criteria set by the researcher: whether trying to investigate a specific dynamic or to broadly understand what a community is like.

The organizing and coding process is a nightmare to do manually. Imagine having six months of ethnographic field notes, plus dozens of hours of interviews, and community texts — choosing how to organize that, and then keeping track of how every line is coded, sorted, and interpreted, is extremely laborious and time consuming. This is where QDA software comes in. I like how Atlas.ti does this, but others (like Nvivo, Dedoose, MaxQDA, etc.) are just as valid. These programs make it far quicker to organize and code data so a qualitative research project can take weeks or months, instead of years, to complete.

Here is where Atlas.ti’s decision to incorporate OpenAI comes in. Here is how they pitch it to users:

Say goodbye to tedious analysis tasks and increase your coding speed with our AI-driven assistance. We are proud to announce a groundbreaking step in the field of qualitative data analysis: AI Coding Beta – our new solution that makes full-automatic data coding a reality, fueled by OpenAI’s GPT model.

There are a few issues with this, issues that are so big I really don’t know how they can possibly justify rolling this out.

For starters, coding is interpretive. You have to decide where the codes begin and end, to say nothing of what they are and how they are defined. Codes change over time, codes get lumped and split as understanding changes, and exceptionally rigorous studies have more than one person coding so intercoder reliability becomes a part of the interpretation process.

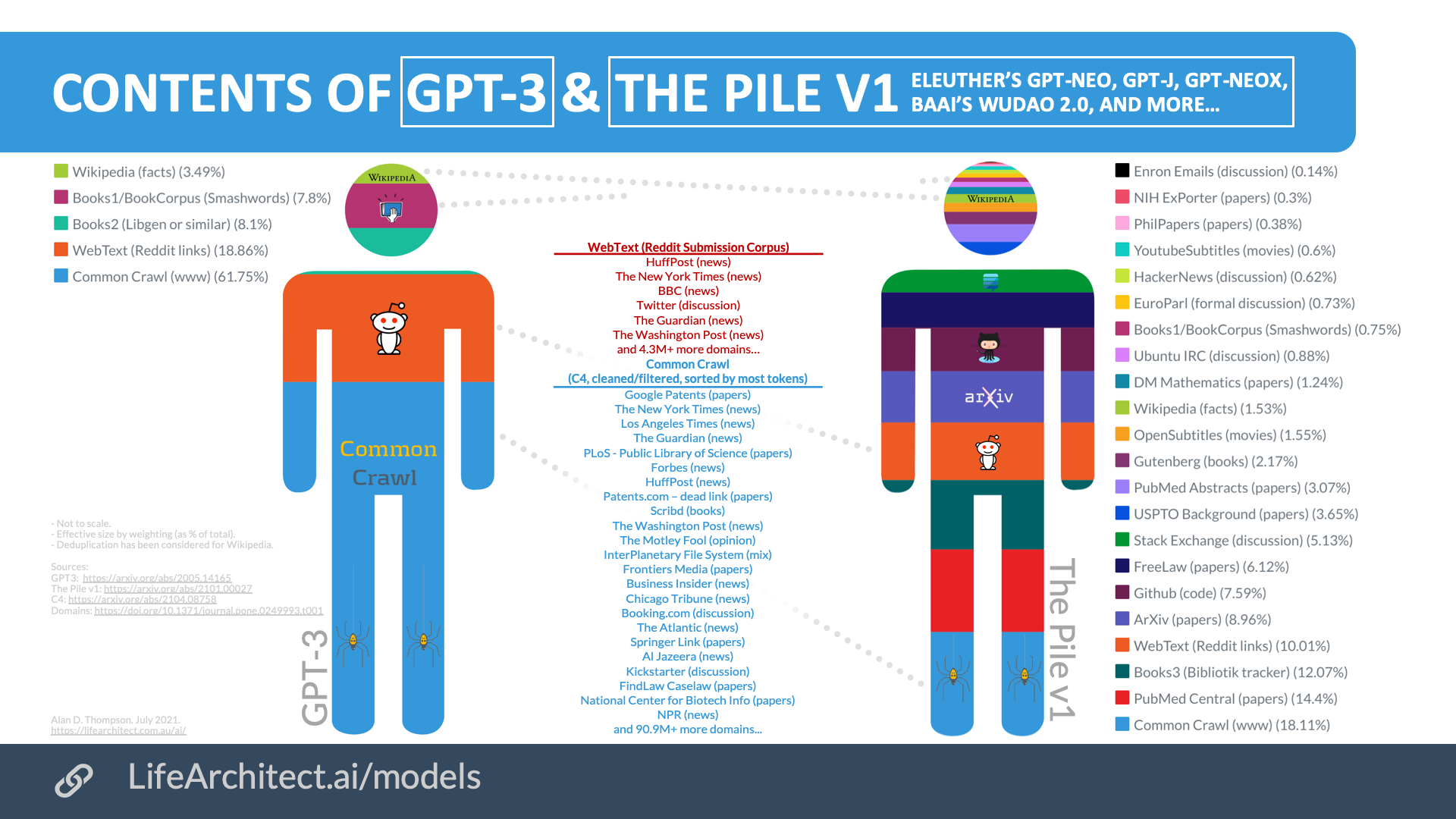

An LLM — especially OpenAIs, but this applies generally — is incapable of this work. I don’t mean they’re bad at it, I mean they’re incapable. Consider how OpenAI trained GPT-3 (the left hand glyph in this graphic):

If you look closely, you can see big chunks of it come from trawling the open web, Reddit, Wikipedia, and a self-publishing website. That is how OpenAI built its statistical model of what configuration of letters is the most likely acceptable answer in response to an input. It is one reason why its output is so stilted, tedious, authoritative, and wrong. The data it was trained on is flawed: heavily weighed toward rich English speaking white men in the West, limited only to people who posts things to the internet, and (with the libgen stuff) limited to what people wish to consume without paying copyright.

On top of this, GPT systems do not have semantic understanding. The acronym explains why: Generative Pre-trained Transformer. It does not interpret new information, in fact it interprets nothing. It transforms things its creators programmed to, and generates output based on parameters its creators do not share because they’re “trade secrets.” These systems “hallucinate” and make authoritative statements about things that don’t exist because they were trained on an extraordinary amount of confident bullshit, and makers like OpenAI did not bother to construct truth-checks before launching it onto the marketplace. It’s not even clear whether those are possible to make, period, since we don’t really know how semantic understanding works in the brain or whether a computer is even capable of duplicating that process.

Let’s put this another way: OpenAI knows these systems generate false or misleading output and they try, belatedly and long after, say, someone dies by suicide because of it, to introduce controls to filter the output to avoid the worst cases of malicious and false output. We do not know the process by which these controls were put into place, how they alter the system’s training or transforming, or how that might alter its weighting of outputs. The whole thing is opaque, shrouded in corporate secrecy, and they want us to just accept that they do a good enough job to incorporate it into the things we do.

Let me be clear, because this blogpost is too long already. Using any LLM to “automate” coding for qualitative research will make that research worse. The central ethos to QDA is transparency, documentation, and interpretation. LLMs do none of these things: OpenAI does not tell us how it trained its models, how (or whether) it filtered the data from somewhere like Reddit, does not provide documentation to users about how the GPT model arrived at an output, and is incapable of engaging in interpretation which is why it generates so much false output.

This morning, Atlas.ti sent out an email to users explaining that demand for its OpenAI implementation was so high it was slowing response times to the point of uselessness. I sincerely hope this is because people are just curious to see how it functions, and I look forward to the articles that will come out in a year or two testing how this system codes a corpus of text compared to a human.

But my objection to this is not based on whether it is “good enough” or not. It is bigger. Atlas.ti is marketing this as automating tedious tasks and speeding up the interpretative process — but an LLM cannot do those things with qualitative data. It does not apply contextual understanding to the text it is asked to summarize, it compares it to Reddit and Wikipedia and self-published books, and based on that comparison makes a guess as to what the new input looks like. That isn’t research. It is fake, a facsimile of thinking.

What this integration represents to me is an adaptation of the same dangerous analytic shortcuts that has damaged quantitative research, which is troubled by a massive replication crisis driven by bad experimental design meant to generate easily-calculated outputs, relentless p-hacking, data skewing, and calculation shortcuts that led to bad conclusions and worse policies based on them. “Automating” the interpretive process promises to bring those problems to qualitative analysis, which has until now had a deliberate and conscious process of systemic thinking as a bulwark against such shortcomings (qualitative research has other limitations, it’s not the everything of research, but that’s a bigger discussion).

The rush to bring these broken and unworkable systems to market is such a titanically bad idea I am shocked to see academics jumping on board. Companies like Google and OpenAI have a clear motivation to get a first mover advantage in the market. And I suspect that is part of why Atlas.ti is jumping on board: they can say they are the first, or whatever, to include COOL NEW AI TOOLS for their software, which they are trying to sell at scale to universities, students, and corporate researchers.

But that doesn’t make it a good idea. Placing an LLM between you and your data is not science. It is the opposite. And the “disruption” that it will cause will only harm us.